In today's tutorial, you will learn how to query data from Umbraco CMS efficiently. I will explain how things work by telling you how I miserably failed to architect my first Umbraco website 😢. I'm going to cover some of the lessons I learned about the Umbraco API and how I overcame them. My first experience with Umbraco was migrating a website to Umbraco CMS. I built the site locally and everything worked great. Before I launched it, I used a great tool called MiniProfiler to check that the website's pages performed well. If you haven't come across MiniProfiler yet, I suggest you read How To Debug Your Umbraco 7 Website.

Umbraco Performance Issues

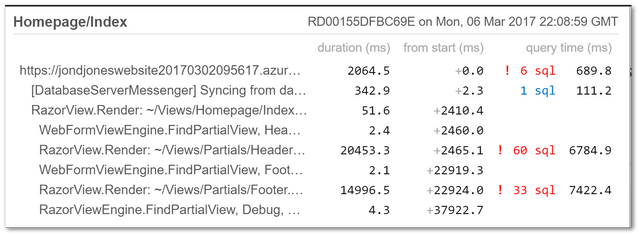

You can see the MiniProfiler results above. My header was somehow making 60 repeated SQL calls. I didn't know it at the time, but the first thing you should do when working with Umbraco is make sure you're using the right API for the job. In Umbraco, you can access data in two different ways: with IContent or with IPublishedContent.

IContent: This gives you read/write access to your Umbraco data. You may also need IContent if you have to access your content from outside an Umbraco context; however, this is a very niche requirement.

IPublishedContent: This is a much faster, read-only way to access your Umbraco data. The API reads from the Umbraco cache, so it is much quicker than the content service approach, which queries the database!
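
To make the difference between the two approaches concrete, here is a small language-neutral model, written in Python rather than Umbraco's C#. It is a sketch under simplifying assumptions, not Umbraco's API: FakeDatabase, DatabaseReader (standing in for IContent-style access) and PublishedCacheReader (standing in for IPublishedContent-style access) are hypothetical names, and the published cache is modelled as a dictionary filled by a single query. Rendering a header that looks up 60 items costs 60 queries through the database reader, while the cache-backed reader pays one query up front and none per request.

```python
# Simplified model, NOT Umbraco's C# API: it only shows why reading from an
# in-memory cache avoids a SQL query per lookup. All names are hypothetical.
from dataclasses import dataclass


@dataclass
class FakeDatabase:
    """Stands in for the SQL database and counts every query it serves."""
    rows: dict[int, str]
    query_count: int = 0

    def select_name(self, node_id: int) -> str:
        self.query_count += 1
        return self.rows[node_id]

    def select_all(self) -> dict[int, str]:
        self.query_count += 1
        return dict(self.rows)


class DatabaseReader:
    """IContent-style access (hypothetical): one query per lookup."""

    def __init__(self, db: FakeDatabase):
        self.db = db

    def name(self, node_id: int) -> str:
        return self.db.select_name(node_id)


class PublishedCacheReader:
    """IPublishedContent-style access (hypothetical): load once, read memory."""

    def __init__(self, db: FakeDatabase):
        self.cache = db.select_all()  # a single query builds the cache

    def name(self, node_id: int) -> str:
        return self.cache[node_id]  # served from memory, no query


def render_header(reader, node_ids: list[int]) -> list[str]:
    return [reader.name(node_id) for node_id in node_ids]


rows = {i: f"Page {i}" for i in range(1, 61)}  # 60 header items

db = FakeDatabase(rows)
render_header(DatabaseReader(db), list(rows))
print(db.query_count)  # 60: one query per item

db2 = FakeDatabase(rows)
cached = PublishedCacheReader(db2)
for _ in range(10):  # ten page requests
    render_header(cached, list(rows))
print(db2.query_count)  # 1: only the query that built the cache
```

In real Umbraco the published cache is maintained by Umbraco itself rather than built by your own code; the model only captures the cost difference between querying per lookup and reading from memory.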

If you find your page is making lots of SQL calls, first check which API you are using. Umbraco database calls are slow, so when you are displaying data on your website, it is recommended to work with the read-only IPublishedContent API rather than querying the database on every request. You can tell you are working in read-only mode because the items returned will be of type IPublishedContent!

Get Descendants Vs Get Children

Using the correct API improved my page performance a lot; however, there was a second cause of excessive CMS queries: some of my own code was also not optimal. The website used a mega-menu as its primary menu. If you are new to the term, think of a menu with a set of top-level items, each of which has a number of sub-items. My first approach was to call GetChildren() to get the top-level items and add them to a collection. I then iterated over that collection and called GetChildren() again on each item to get its children.
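
The nested GetChildren() pattern is a classic "one query per item" problem. The following Python sketch models it; FakeTree and get_children() are hypothetical stand-ins that count each call as one SQL query, not Umbraco's real API. With 10 top-level items, building the menu takes 11 calls (one for the top level plus one per item), and the count keeps growing as the menu grows.

```python
# Simplified model, NOT Umbraco's API: FakeTree and get_children() are
# hypothetical stand-ins where every call counts as one SQL query.
from collections import defaultdict


class FakeTree:
    def __init__(self, parent_of: dict[int, int]):
        self.children = defaultdict(list)
        for node, parent in parent_of.items():
            self.children[parent].append(node)
        self.query_count = 0

    def get_children(self, node_id: int) -> list[int]:
        self.query_count += 1  # one round trip per call
        return list(self.children[node_id])


def build_menu_with_children(tree: FakeTree, root: int) -> dict[int, list[int]]:
    menu = {}
    for top in tree.get_children(root):  # 1 query for the top level...
        menu[top] = tree.get_children(top)  # ...plus 1 query per top-level item
    return menu


# 10 top-level items under root 0, each with 5 sub-items.
parent_of = {top: 0 for top in range(1, 11)}
parent_of.update({top * 100 + sub: top for top in range(1, 11) for sub in range(5)})

tree = FakeTree(parent_of)
menu = build_menu_with_children(tree, root=0)
print(tree.query_count)  # 11
```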

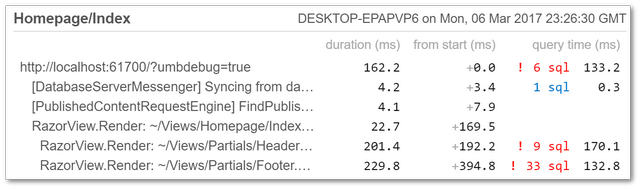

As you can see from the MiniProfiler results, this was causing a lot of extra calls, and the more calls your web page has to make, the slower it will load. Rather than making a separate call for every menu section, there was a better approach: GetDescendants(). GetDescendants() returns all the pages below a given page (its children, their children, and so on), which let me get all the menu data in one hit rather than many. Instead of calling GetChildren() numerous times, I called GetDescendants() once and then parsed the data in code. As you can see from the comparison, using the API in a slightly different way saved over 50 SQL queries per request.
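
Here is a mega-menu built the GetDescendants() way, again as a hypothetical Python model rather than Umbraco's API: get_descendants() returns every (node, parent) pair below the root in one counted call, and the menu is then assembled in memory by grouping nodes by their parent. Under these assumptions the whole menu costs a single query, whatever its size.

```python
# Simplified model, NOT Umbraco's API: get_descendants() is a hypothetical
# stand-in that returns the whole subtree in one counted call.
from collections import defaultdict


class FakeTree:
    def __init__(self, parent_of: dict[int, int]):
        self.parent_of = dict(parent_of)
        self.query_count = 0

    def get_descendants(self, root: int) -> list[tuple[int, int]]:
        """Return (node, parent) pairs for everything below root in one call."""
        self.query_count += 1  # the whole subtree comes back in one round trip
        found = []
        stack = [root]
        while stack:
            current = stack.pop()
            for node, parent in self.parent_of.items():
                if parent == current:
                    found.append((node, parent))
                    stack.append(node)
        return found


def build_menu_with_descendants(tree: FakeTree, root: int) -> dict[int, list[int]]:
    by_parent = defaultdict(list)
    for node, parent in tree.get_descendants(root):  # the only query
        by_parent[parent].append(node)  # group the flat list in memory
    return {top: by_parent.get(top, []) for top in by_parent.get(root, [])}


# 10 top-level items under root 0, each with 5 sub-items.
parent_of = {top: 0 for top in range(1, 11)}
parent_of.update({top * 100 + sub: top for top in range(1, 11) for sub in range(5)})

tree = FakeTree(parent_of)
menu = build_menu_with_descendants(tree, root=0)
print(tree.query_count)  # 1
print(menu[1])  # [100, 101, 102, 103, 104]
```

The trade-off is that you fetch the whole subtree at once, so this works best for a shallow, bounded tree such as a menu; on a deep site, fetching every descendant could return far more data than the page needs.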

Caching

After refactoring the code to remove 51 SQL requests, I still had 9 calls on the first page load. To get around this, I simply put all the data into the cache for an hour. This means that once an hour, one request has to do a little more work, but most requests get a quicker experience. Caching objects in code is really simple.
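
As a language-neutral sketch of the "cache it for an hour" idea, here is a small time-based cache in Python. TimedCache and get_or_add() are hypothetical names, not Umbraco's runtime cache API. The clock is injectable so expiry can be demonstrated without waiting an hour: 100 requests inside the same hour trigger one expensive build, and the first request after the hour rebuilds it.

```python
# Simplified sketch of "cache it for an hour", NOT Umbraco's runtime cache
# API. TimedCache and get_or_add() are hypothetical names.
import time
from typing import Any, Callable


class TimedCache:
    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock  # injectable so expiry can be tested
        self._items: dict[str, tuple[float, Any]] = {}

    def get_or_add(self, key: str, factory: Callable[[], Any], ttl_seconds: float) -> Any:
        now = self._clock()
        entry = self._items.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: skip the expensive work
        value = factory()  # missing or expired: rebuild once
        self._items[key] = (now + ttl_seconds, value)
        return value


ONE_HOUR = 60 * 60
fake_now = [0.0]  # a controllable clock for the demo
cache = TimedCache(clock=lambda: fake_now[0])
builds = []


def build_menu() -> list[str]:
    builds.append(1)  # stands in for the SQL-heavy menu queries
    return ["Home", "About", "Contact"]


for _ in range(100):  # 100 requests within the same hour
    cache.get_or_add("mega-menu", build_menu, ONE_HOUR)
print(len(builds))  # 1

fake_now[0] += ONE_HOUR + 1  # an hour later the entry has expired
cache.get_or_add("mega-menu", build_menu, ONE_HOUR)
print(len(builds))  # 2
```

In a real Umbraco site you would use the cache Umbraco itself provides rather than rolling your own, so check the documentation for your Umbraco version for the exact calls. This sketch is also not thread-safe, which a cache serving concurrent requests needs to be.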


When you try to make your pages faster, there are usually one or two main pain points. In my example, it was the header that was making the page load slowly. When it comes to Umbraco, make sure you are using IPublishedContent, as in most instances this will remove all SQL calls! Using the three techniques outlined above, I reduced my page from 100 SQL queries on the first page load down to under 5. Using the correct API calls made my site more efficient. As I hope you can see, using the wrong API, or the wrong method, can add a lot of lag to your page load time. To fix performance issues, I recommend using MiniProfiler to help you find the slowest parts of your page. Happy Coding 🤘