In this tutorial, you will learn how to export event data out of Optimizely Web and then import it into BigQuery. If you are looking for an easy way to start doing additional querying on your Optimizely experimentation data, this is the article for you 🔥🔥🔥

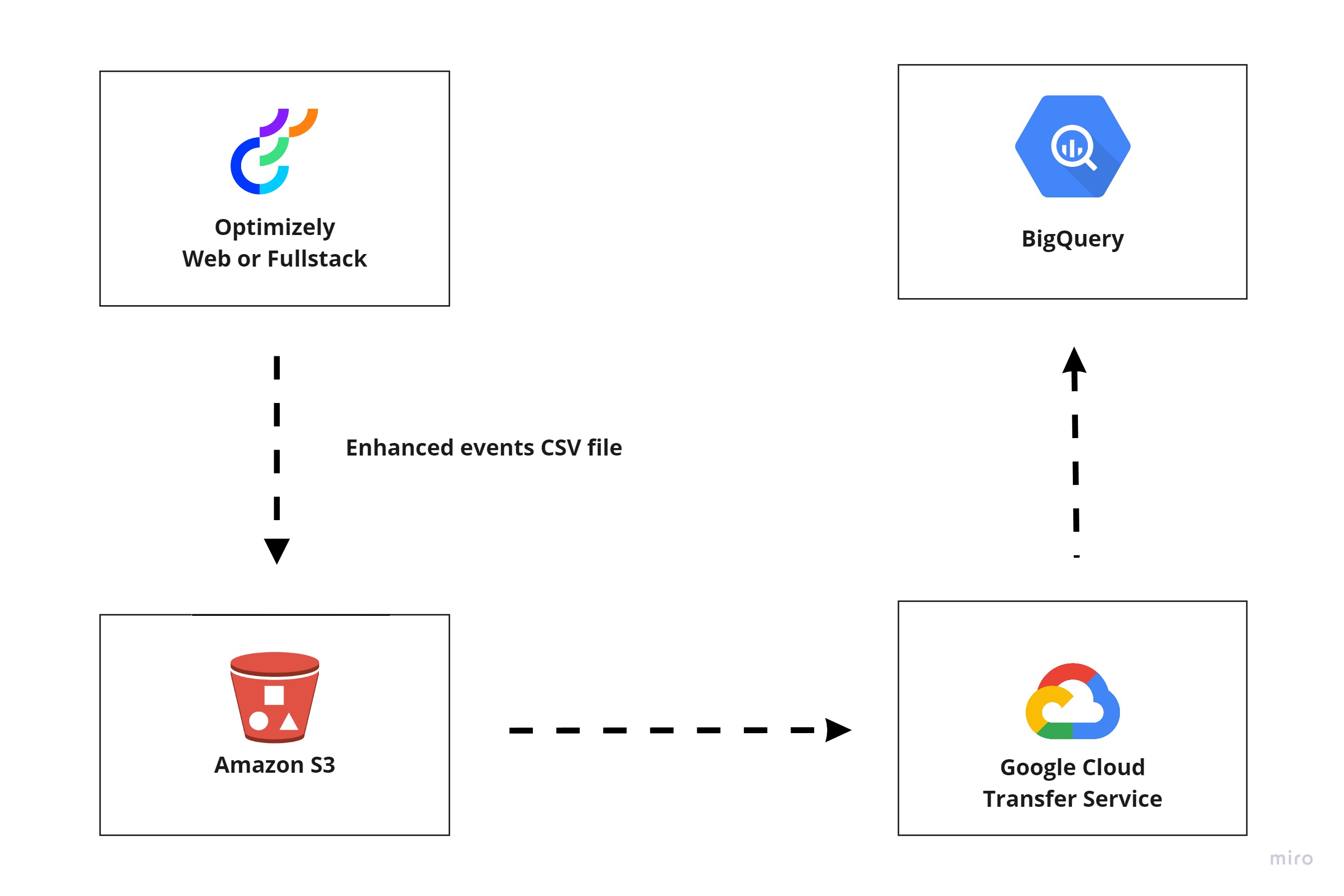

Importing data into BigQuery from Optimizely Web, or Fullstack is possible, however, as of writing, there is no one-click integration that magically makes the transfer just work. Instead, you will need to manually enable some things in Optimizely and Google Cloud in order to get the transfer working. The good news is that the set-up should be pretty quick and painless. The process can also be scheduled so this is a perform once type of set-up task. Below shows a high-level overview of the process:

To summarise this process, you will need to enable something within Optimizely called the enriched events export. Once the event export is enabled, all the captured event data along with other enriched data like attribution, session, and user segments will be copied into a CSV file that will get uploaded into AWS S3 bucket. Contained within this CSV file you will have access to data such as the decision events, view activations, and conversion events so you can do your own data analysis outside of the Optimizely platform. This file will then get updated every 24 hours with all the latest data.

You can enable the event export by contacting support and they will enable everything and give you your s3 connection details.

One tool to do your offsite data analysis is Google Clouds BigQuery. BigQuery can be configured to import a CSV file into a custom BigQuery table. By scheduling the Optimizely data to be copied into BigQuery every 24 hours you will then be free to do whatever you want with that data in BigQuery.

The final piece of the puzzle is how do we sync the CSV file from the S3 bucket into BigQuery. This is where Google Cloud Platform (GCP) comes into play. Using services like the Cloud Storage Transfer Service you can copy the CSV into Cloud storage where BigQuery can ingest it. An even easier way is to enable a data transfer within BigQuery itself!

To transfer a CSV within BigQuery, select Data Transfer ➡ Transfer Service from the sidebar. Select Amazon S3 Bucket. Enter the bucket's name, and paste in the access key ID:

In order to copy the data contained within the Optimizely CSV file into BigQuery, you will need to create a new dataset. You can do that from the Destination section within the import screen. You should also set the service to run every 24 hours so BigQuery will automatically get all new updates made within Optimizely. This can be done from the Schedule options section. With this data sync enabled, you should now have easy access to your Optimizely experimentation data in BigQuery!

As you can see the effort to get these two platforms to talk to each other is minimal. As long as you have the correct connection details the process should take less than 15 minutes. Happy Coding 🤘